I apologize in advance for the horrendous pun. Those responsible have been sacked… wait, I’m still here. That didn’t even work. Well, since we’re here, might as well explain what that title was about. I just received a Trident Blade 3D, and decided to put it to the test.

The mere name of Trident should strike fear into the heart of old PC gamers. Very few graphics accelerators could boast worse image quality than the 3DImage 9750 and its successor, the 9850. So what did Trident choose to do? Well, for example, they made another one. The 9880, also known as Blade 3D, is proof that third time’s the charm. Sorta, anyway.

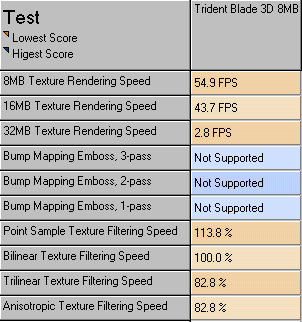

Image quality was actually pretty good (amazing!), but I’m afraid performance is nothing to brag about. It scores decently on 3DMark 99; after all, 2841 is nothing to sneeze at. But real game performance is a bit less impressive. Quake 2 turns out the following results:

640×480: 24.2fps

800×600: 19.6fps

1024×768: 13.9fps

If anything, these results are consistently bad. Now, I should mention that the OpenGL driver was probably the beta-est of betas. In fact, the driver I initially found didn’t even include OpenGL support. And that was one of the latest I could find, if not the latest. It did, however, include an ICD that you had to install separately; by default it would choose the other one. I suspect Trident had no confidence in it either, but coming out in 1999 without OpenGL support was a bad idea, so they had no choice.

Anyway, these numbers are really low. I mean Rage LT Pro low. I mean it’s the lowest 640×480 result so far. It does recover a little bit in 1024×768 (where other cards are slower), so maybe it’s quite dependent on CPU speed. Either way, getting close to an Intel 740 is nothing to brag about. But after all, that was the price point.
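For the curious, here is a quick back-of-the-envelope check of that CPU hunch, as a rough sketch rather than a proper analysis. It assumes (my simplification) that a card limited purely by fill rate would lose fps in direct proportion to the number of pixels on screen, and it ignores overdraw, texture bandwidth and everything else. The names `QUAKE2_FPS` and `scaling_report` are made up for this example; only the three fps figures come from the test above.

```python
# Quake 2 results quoted in this post: (width, height) -> average fps.
QUAKE2_FPS = {
    (640, 480): 24.2,
    (800, 600): 19.6,
    (1024, 768): 13.9,
}


def scaling_report(results):
    """Compare measured fps with a naive 'purely fill-rate-bound' prediction.

    Assumption (a simplification, not a measurement): a card limited only by
    fill rate loses fps in direct proportion to the on-screen pixel count.
    """
    # Sort by pixel count so the lowest resolution becomes the baseline.
    ordered = sorted(results.items(), key=lambda item: item[0][0] * item[0][1])
    (base_w, base_h), base_fps = ordered[0]
    base_pixels = base_w * base_h

    rows = []
    for (width, height), fps in ordered:
        pixels = width * height
        rows.append({
            "resolution": f"{width}x{height}",
            "measured_fps": fps,
            # What the baseline result would shrink to if only pixels mattered.
            "fill_bound_fps": base_fps * base_pixels / pixels,
            # On-screen pixels drawn per second, in millions.
            "mpixels_per_s": pixels * fps / 1e6,
        })
    return rows


for row in scaling_report(QUAKE2_FPS):
    print(
        f"{row['resolution']:>9}: measured {row['measured_fps']:5.1f} fps, "
        f"fill-bound guess {row['fill_bound_fps']:5.1f} fps, "
        f"{row['mpixels_per_s']:5.2f} Mpixels/s"
    )
```

The measured 1024×768 result sits well above the fill-bound guess, and the pixels-per-second figure keeps climbing with resolution. That fits the idea of a fixed per-frame cost somewhere in the CPU or the driver, though three data points prove nothing on their own.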

Forsaken fares a bit better. Unfortunately the results are vsynced. I tried to disable it, but you know how bad that game is.

640×480: 58.7fps

800×600: 56.3fps

1024×768: 31.4fps

That is actually decent for 800×600. Too bad about the severe drop at the highest resolution. But still, coupled with the good image quality, this would be a win for a budget card. Of course, Forsaken isn’t the heaviest game around, and I guess 8MB was starting to get a little thin by that year, but still. You got what you paid for, and maybe a bit more. Except for good OpenGL drivers.

Trident’s next series, the XP4, would return the company to its old, terrible ways. Success is a fleeting thing, isn’t it? I could probably say the same about S3, but I don’t really know yet: I bought a Savage4 Pro just a few days ago and I’m still waiting for it to arrive…